The issue with SEO in SPAs

The issue with SEO in SPAs has to do with where the page gets built, or rendered. The rise of client-side SPA frameworks like React.js, Vue.js and others caused an issue I never encountered with server-side frameworks. In short, there are two ways of rendering a page. Traditionally, the page is rendered on the server. Bots like GoogleBot visit your page, read its content and meta tags, and use that information for Google's search engine. SPA frameworks work a little differently. Normally, a blank page is loaded first, and the framework builds the entire page client-side as soon as the JavaScript bundle has loaded. Besides potential performance issues, this raises a new problem: a bot that visits the page finds a blank page and reports exactly that.
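To make the difference concrete, here is a minimal TypeScript sketch of the first HTML response a bot could receive for the same page in each case. It is not React or Next.js code, and the Article shape, the /bundle.js path and the function names are hypothetical. The server-rendered response already contains the title and description; the client-side shell contains nothing until the bundle has run.

```typescript
// Hypothetical article data; a real app would load this from a CMS or API.
interface Article {
  title: string;
  description: string;
  body: string;
}

// Minimal escaping so article text cannot break the generated markup.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Server-side rendering: content and meta tags are part of the first response,
// so a bot that never runs JavaScript still sees them.
function renderOnServer(article: Article): string {
  const title = escapeHtml(article.title);
  return [
    "<!DOCTYPE html>",
    "<html>",
    `<head><title>${title}</title>` +
      `<meta name="description" content="${escapeHtml(article.description)}"></head>`,
    `<body><main><h1>${title}</h1><p>${escapeHtml(article.body)}</p></main></body>`,
    "</html>",
  ].join("\n");
}

// Client-side rendering: the first response is an empty shell. Content and
// meta tags only appear after the (hypothetical) bundle has loaded and run.
function renderClientShell(): string {
  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head><title></title></head>",
    '<body><div id="root"></div><script src="/bundle.js"></script></body>',
    "</html>",
  ].join("\n");
}

const article: Article = {
  title: "SEO in SPAs",
  description: "Why client-side rendering can hide content from bots.",
  body: "Bots read what the server sends.",
};

console.log(renderOnServer(article).includes("<title>SEO in SPAs</title>")); // true
console.log(renderClientShell().includes("SEO in SPAs")); // false
```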

So is Google just ignoring this?

Nope, they are trying their best to deal with this issue. As Google explains in its documentation, Google can execute JavaScript to build the page and then read its content and metadata. So, problem fixed? Well, not entirely. Google also mentions that things sometimes don't go perfectly during rendering, which may negatively impact search results for your site. Google provides tools you can use to debug your pages and see what causes these issues.

Do I want to deal with this?

Well, no. Building applications for the web is hard enough. At the start, I mentioned that I wanted to solve this issue with modern tools and techniques I love to use. Let's pick React.js as the SPA framework. I like to combine React.js with Next.js to solve many of the issues with SPA frameworks. You can read more about that in my article "Implementing the latest web technologies to boost the Mirabeau blog".

I will leverage the server-side rendering that Next.js offers. This solves the issue the old-fashioned way: the page is rendered on the server, GoogleBot gets the content and metadata, and my problem is solved.
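Next.js supports more than one way to render on the server. As an illustration, the sketch below assumes the pages router convention of exporting a getServerSideProps function from a page, which Next.js runs on the server for each request before rendering the HTML. The fetchArticle helper, the in-memory articles map and the slug are hypothetical, and the context and result types are simplified stand-ins for the ones Next.js ships, so the file runs without the framework installed.

```typescript
// Simplified stand-ins for types Next.js provides; in a real project you would
// use the GetServerSideProps type from the "next" package instead.
interface ArticleProps {
  title: string;
  description: string;
}

interface PageContext {
  params?: { slug?: string };
}

type ServerSideResult =
  | { props: { article: ArticleProps } }
  | { notFound: true };

// Hypothetical data source standing in for the blog's CMS.
const articles: Record<string, ArticleProps> = {
  "seo-in-spas": {
    title: "SEO in SPAs",
    description: "Rendering pages on the server so bots see the content.",
  },
};

async function fetchArticle(slug: string): Promise<ArticleProps | undefined> {
  return articles[slug];
}

// Runs on the server for every request; the returned props are used to render
// the full HTML, meta tags included, before it is sent to the browser or bot.
export async function getServerSideProps(context: PageContext): Promise<ServerSideResult> {
  const slug = context.params?.slug;
  const article = slug ? await fetchArticle(slug) : undefined;
  if (!article) {
    // Tells Next.js to respond with its 404 page instead.
    return { notFound: true };
  }
  return { props: { article } };
}
```

In an actual page file, the same module would also export the React component that receives article as a prop.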

Taking it a bit further

I could call it a day now, but let's look at how I took it a bit further when building the Mirabeau blog. GoogleBot receives the content, which is written with semantic markup. The metadata, however, I have to implement myself. In theory, this is simple: I have a set of basic meta tags that I update for every page. An article page may use the title and description of the article, while the home page uses the name of the blog and its tagline.
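The choice between those two sets of values can be sketched as a small function. This is an illustration under assumptions, not the blog's actual code: the Page union, its field names and basicMetaFor are hypothetical.

```typescript
// The two page types from the text; the field names are assumptions.
type Page =
  | { kind: "home"; blogName: string; tagline: string }
  | { kind: "article"; title: string; description: string };

// Values for the basic tags every page gets: <title> and the meta description.
interface BasicMeta {
  title: string;
  description: string;
}

function basicMetaFor(page: Page): BasicMeta {
  if (page.kind === "home") {
    // Home page: the blog's name and tagline.
    return { title: page.blogName, description: page.tagline };
  }
  // Article page: the article's own title and description.
  return { title: page.title, description: page.description };
}

console.log(basicMetaFor({ kind: "home", blogName: "Mirabeau blog", tagline: "An example tagline" }).title); // Mirabeau blog
console.log(basicMetaFor({ kind: "article", title: "SEO in SPAs", description: "An example description" }).title); // SEO in SPAs
```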

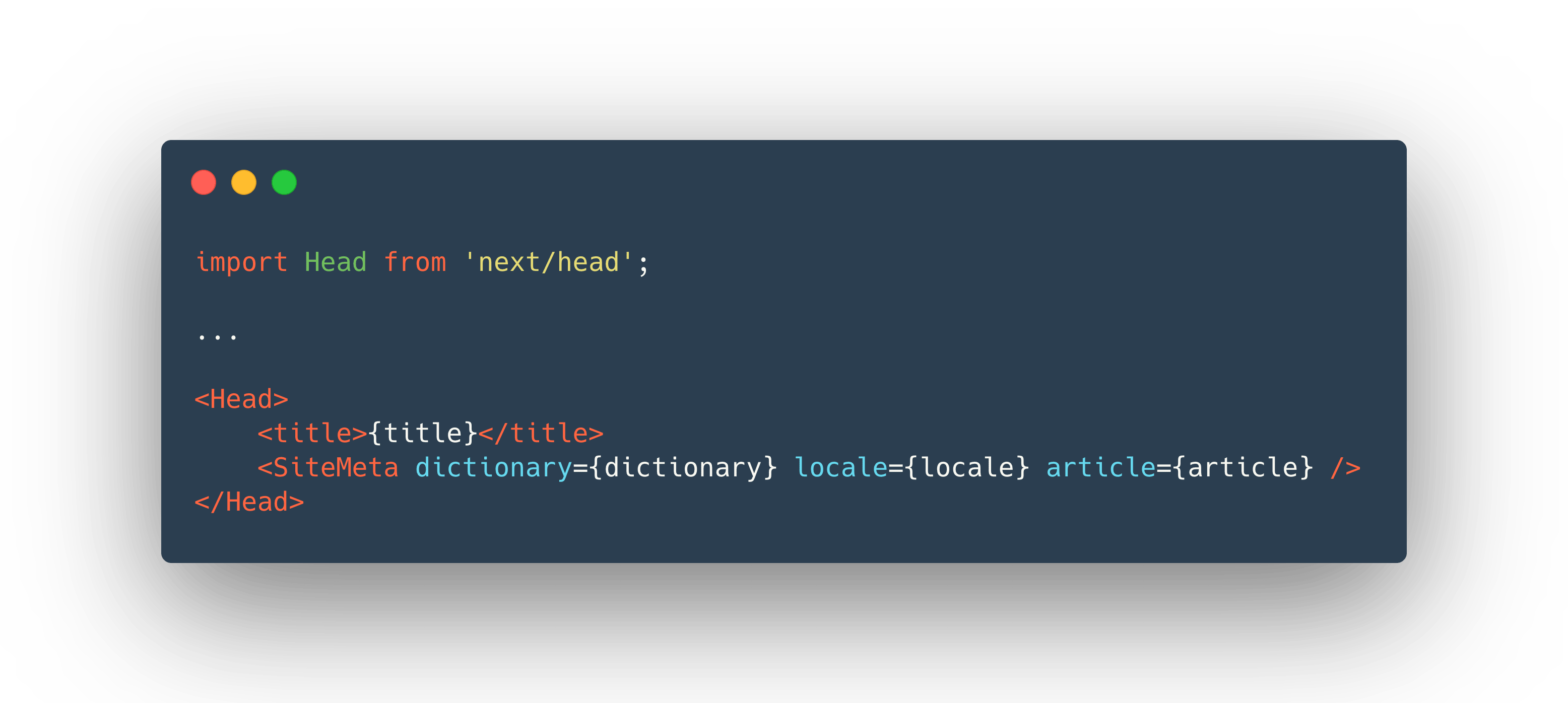

In Next.js, I load a component called SiteMeta into the head element of the page, using the Head component that Next.js provides (next/head), like so:

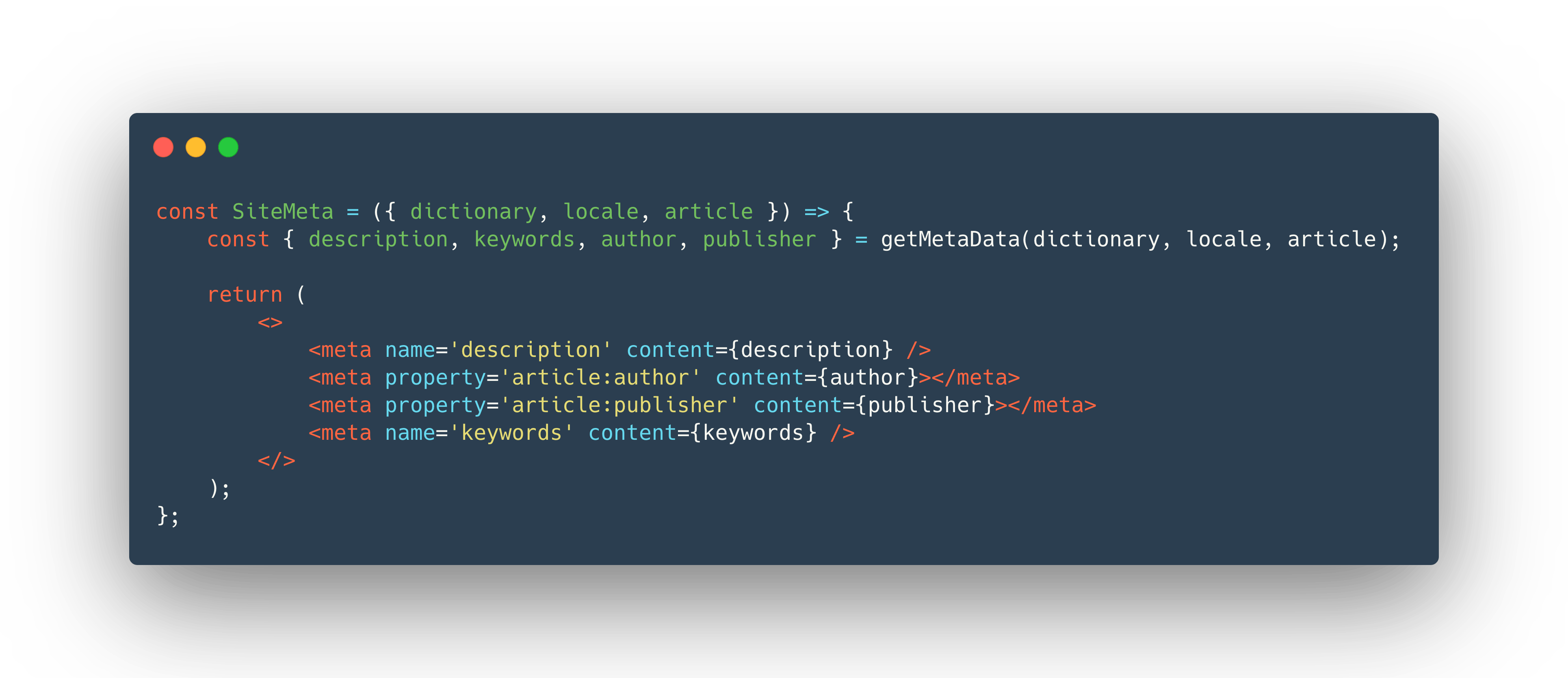

For an article, for instance, I set the title tag to the title of the article. I then load the SiteMeta component, which sets a couple more tags. This already looks more like it: the meta tags are added dynamically based on the article and the locale. Here you can see a simplified version:
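As a hedged stand-in for such a component, the following hypothetical sketch builds the title and description tags for one article in one locale as plain strings. The Locale union, the LocalizedArticle shape and articleHeadTags are assumptions. In a real React component, the values would be rendered as JSX inside the Next.js Head component and React would escape them itself; the explicit escaping here only exists because the sketch builds raw HTML.

```typescript
type Locale = "en" | "nl";

// Hypothetical CMS shape: every text field is stored once per locale.
interface LocalizedArticle {
  title: Record<Locale, string>;
  description: Record<Locale, string>;
}

// Escapes characters that could break out of an attribute value or element text.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Plain-string approximation of the head tags for one article in one locale:
// the page sets <title>, a SiteMeta-like component adds the description.
function articleHeadTags(article: LocalizedArticle, locale: Locale): string[] {
  return [
    `<title>${escapeHtml(article.title[locale])}</title>`,
    `<meta name="description" content="${escapeHtml(article.description[locale])}">`,
  ];
}

const sample: LocalizedArticle = {
  title: { en: "SEO in SPAs", nl: "SEO in SPA's" },
  description: { en: "Rendering on the server", nl: "Renderen op de server" },
};

console.log(articleHeadTags(sample, "nl").join("\n"));
```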

Taking it even further by taking social media into account

Social media is vital for the reach of your blog. Naturally, we need to spend some time here to have the best chance of getting people to click the link to the article.

Open Graph

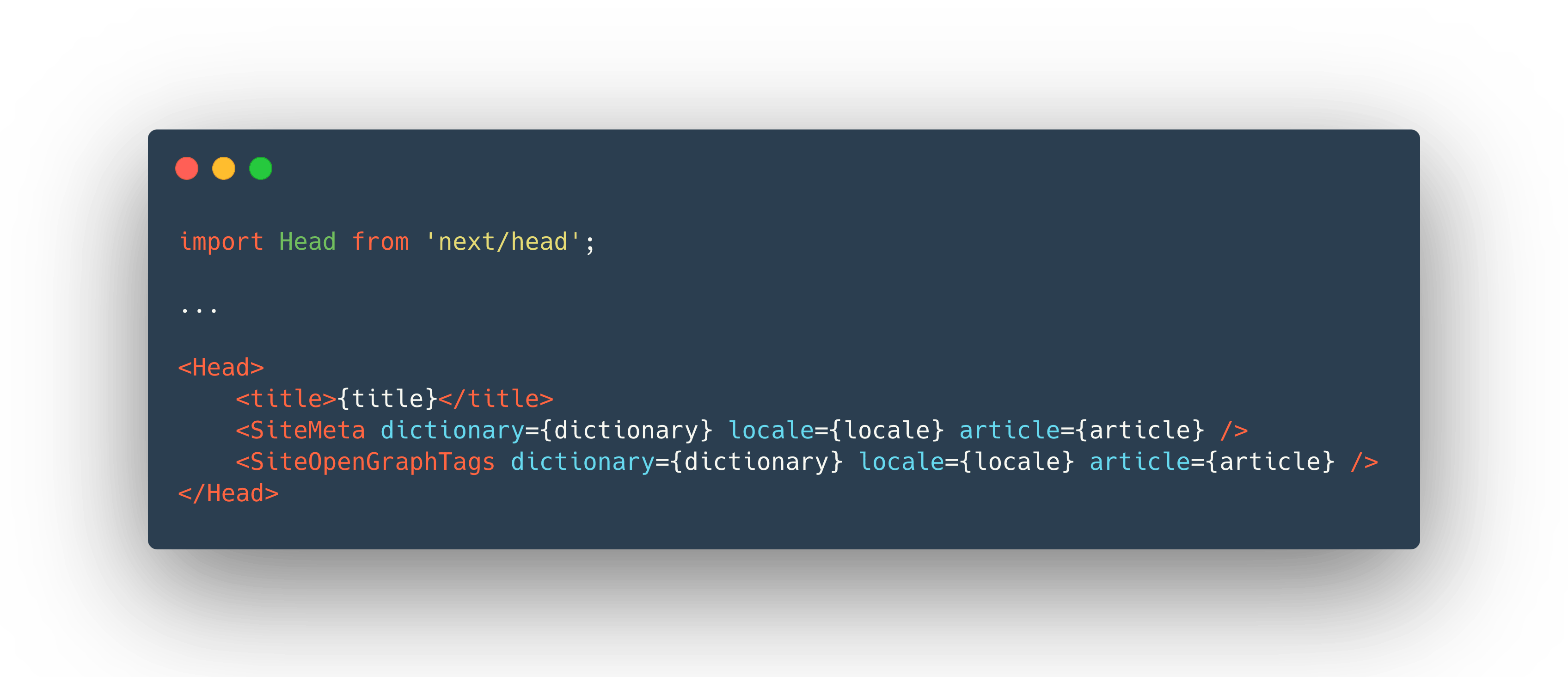

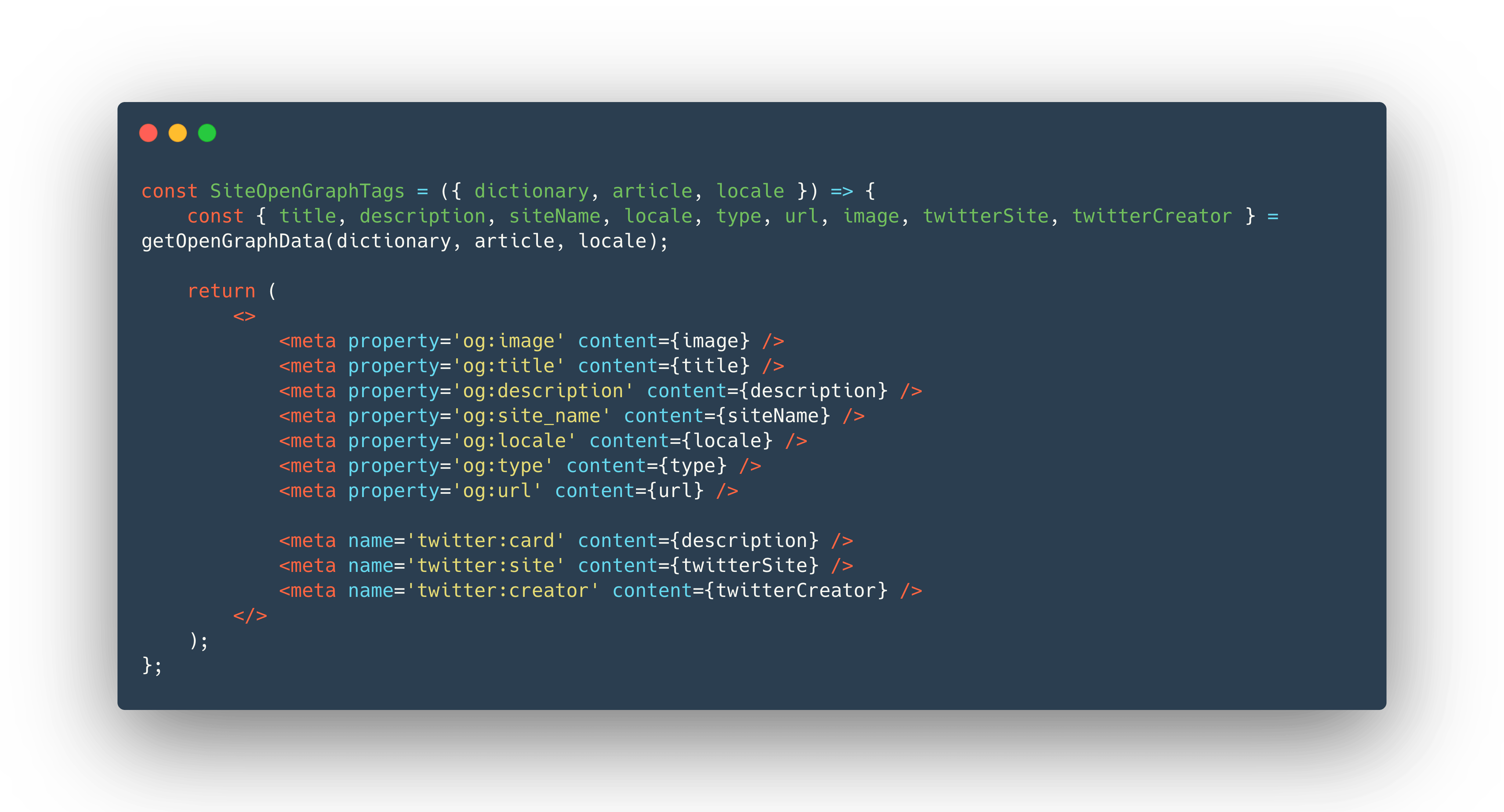

I can describe the page even further by using the Open Graph standard. Open Graph is a protocol supported by all major social media websites and their crawlers. I use it to create semantic tags for SEO and sharing. For example, I can provide a title, image and summary for an article page aimed specifically at social media sites. To do so, I load a second component called SiteOpenGraphTags in the head element.

Here I set additional tags according to the Open Graph specification. Below is a simplified version that, for instance, also sets the tags for Twitter cards, which Twitter defines separately from Open Graph.
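A minimal sketch of the kind of tags such a component could emit, assuming a hypothetical ShareData object filled from the CMS. The Open Graph protocol lists og:title, og:type, og:image and og:url as its basic properties and uses the property attribute; the twitter: tags are Twitter's own card markup, conventionally set with the name attribute. The function names socialTags and toHtml are illustrative only.

```typescript
// A meta tag as a plain descriptor. Open Graph uses the "property" attribute,
// Twitter card tags conventionally use "name".
interface MetaTag {
  attribute: "property" | "name";
  key: string;
  content: string;
}

// Hypothetical share data, e.g. as entered by an editor in the CMS.
interface ShareData {
  url: string;
  title: string;
  summary: string;
  imageUrl: string;
}

function socialTags(share: ShareData): MetaTag[] {
  const og = (key: string, content: string): MetaTag => ({ attribute: "property", key: `og:${key}`, content });
  const twitter = (key: string, content: string): MetaTag => ({ attribute: "name", key: `twitter:${key}`, content });
  return [
    // Basic Open Graph properties plus a description.
    og("type", "article"),
    og("url", share.url),
    og("title", share.title),
    og("description", share.summary),
    og("image", share.imageUrl),
    // Twitter card: "summary_large_image" requests a card with a large image.
    twitter("card", "summary_large_image"),
    twitter("title", share.title),
    twitter("description", share.summary),
    twitter("image", share.imageUrl),
  ];
}

// Serialise one descriptor; inside a double-quoted attribute only & and " need escaping.
function toHtml(tag: MetaTag): string {
  const content = tag.content.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
  return `<meta ${tag.attribute}="${tag.key}" content="${content}">`;
}

const share: ShareData = {
  url: "https://example.com/articles/seo-in-spas",
  title: "SEO in SPAs",
  summary: "Server-side rendering & social cards",
  imageUrl: "https://example.com/images/seo-in-spas.png",
};

console.log(socialTags(share).map(toHtml).join("\n"));
```

Keeping the tags as descriptors first makes it easy to render them either as strings, as here, or as JSX meta elements in a React component.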

The result

When I share an article on social media, it now shows a custom title, description and image that I can set in the CMS. A social media card might look something like this:

Case: Lely Used

Sure, a blog is relatively simple. Often, we need to put in a bit more work. Lely Used is a platform I built in a similar way. It consists of content pages, a product search page and product detail pages. The goal is to sell products, so detail pages are often shared on social media. The implementation for most pages is the same, but where a blog has article pages, an e-commerce platform has product pages. I therefore looked into setting dynamic tags specific to each product. Below you can see the result for a product detail page.

I've added quite a bit of information in this card. You can see the:

- platform

- model

- location

- build year

- localised currency and amount (price)

- specifications

- image of product

- content localised based on the locale in the link

These tags are used both when sharing the product and by bots like GoogleBot.
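The product variant can be sketched the same way. Everything here is an assumption for illustration: the Product fields, the title format and productOpenGraph are hypothetical; og:type "product" and the product:price:amount and product:price:currency properties follow Facebook's product markup rather than the core Open Graph specification; and Intl.NumberFormat is the standard JavaScript API used here to format the price for the locale taken from the link.

```typescript
// Hypothetical product shape; the fields mirror the list above.
interface Product {
  model: string;
  location: string;
  buildYear: number;
  price: number; // amount in major units, e.g. 45000
  currency: string; // ISO 4217 code, e.g. "EUR"
  specifications: string[];
  imageUrl: string;
  url: string;
}

// Returns [property, content] pairs for one product page in one locale,
// where locale is a BCP 47 tag such as "nl-NL" taken from the link.
function productOpenGraph(product: Product, locale: string, platform: string): Array<[string, string]> {
  // Format the price using the locale's currency conventions.
  const formattedPrice = new Intl.NumberFormat(locale, {
    style: "currency",
    currency: product.currency,
  }).format(product.price);
  const title = `${product.model} (${product.buildYear}) - ${product.location}`;
  const description = [formattedPrice, ...product.specifications].join(" | ");
  return [
    ["og:site_name", platform],
    ["og:type", "product"],
    ["og:url", product.url],
    // Open Graph expects locales like "nl_NL" rather than "nl-NL".
    ["og:locale", locale.replace("-", "_")],
    ["og:title", title],
    ["og:description", description],
    ["og:image", product.imageUrl],
    // Machine-readable price, as used by Facebook's product markup.
    ["product:price:amount", product.price.toFixed(2)],
    ["product:price:currency", product.currency],
  ];
}

const sampleProduct: Product = {
  model: "Example model",
  location: "Example location",
  buildYear: 2019,
  price: 45000,
  currency: "EUR",
  specifications: ["Example specification"],
  imageUrl: "https://example.com/images/product.jpg",
  url: "https://example.com/nl-NL/products/example",
};

// Escaping is omitted here because the sample values contain no quotes or ampersands.
for (const [property, content] of productOpenGraph(sampleProduct, "nl-NL", "Lely Used")) {
  console.log(`<meta property="${property}" content="${content}">`);
}
```

Because the output of Intl.NumberFormat depends on the runtime's locale data, the formatted price in the description may differ slightly between environments; the machine-readable product:price:amount stays a plain number.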

Looking back

SPA frameworks have an impact on SEO. Although Google is working hard on this, there are still several issues. I can solve these issues by using server-side rendering with a framework like Next.js. I can use React.js to dynamically load regular meta tags and Open Graph tags in the head element of the page, and then fully customise those tags based on the kind of page. This is my current way of handling SEO with SPA frameworks. What is yours?